Writing test cases is one of the most important activities a tester performs during the testing cycle. Writing good test cases starts with identifying, defining, and analyzing the requirements.

When you begin writing test cases, there are a few steps you should follow to make sure you are writing good ones:

1. Identify the purpose of testing. The first step is to define the purpose of testing, which requires understanding the requirements to be tested.

When you start writing test cases for any software module, you must understand the module's features and the user requirements.

2. Define how to perform testing. The second step is to define how testing will be performed, which includes defining test scenarios. To write good test scenarios, you should be well versed in the functional requirements and know how the software is used across its various operations.

3. Identify non-functional requirements. The third step is to understand the other aspects of the software related to non-functional requirements, such as hardware requirements, the operating system, security aspects to be considered, and other prerequisites such as data files or test data preparation. Testing non-functional requirements is very important. For example, if the software requires the user to fill in a form, the developer should define proper time-out logic so that the session does not time out while the user is submitting the form after filling in all the required information. At the same time, in the same scenario, the tester should also verify that the user is logged off after a defined delay, so that the security of the application is not breached.

4. Define a framework of test cases. The fourth step is to define a framework of test cases that covers several categories: UI, functionality, fault tolerance, compatibility, and performance. Each category should be defined according to the logic of the software application.

5. Understand the modular principle. The next step is to become familiar with the modular principle. Analyzing the relevance of each module in the application is relatively easy; what matters more is understanding the coupling between modules and testing their "mutual influence".

Test cases should be designed to cover the influence of each module on the other modules of the application. For example, in online shopping software, while testing the shopping cart and order checkout, you also need to consider inventory management and verify that the purchased quantity of the product is deducted from store inventory. Similarly, while testing returns, you need to test their effect on the financial part of the application as well as on store inventory.
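The checkout example can be expressed as an automated check. The sketch below is a minimal illustration in Python; the `Inventory`, `Ledger` and `Shop` classes are hypothetical stand-ins for the inventory, financial and checkout modules, not part of any real shopping application. The point is that a single test asserts on the state of every module the action touches, not only on the module being exercised.

```python
class Inventory:
    """Hypothetical inventory module: product -> quantity on hand."""

    def __init__(self, stock):
        self.stock = dict(stock)

    def remove(self, product, qty):
        if self.stock.get(product, 0) < qty:
            raise ValueError(f"insufficient stock for {product}")
        self.stock[product] -= qty

    def add(self, product, qty):
        self.stock[product] = self.stock.get(product, 0) + qty


class Ledger:
    """Hypothetical financial module: keeps a running revenue total."""

    def __init__(self):
        self.revenue = 0

    def record(self, amount):
        self.revenue += amount


class Shop:
    """Hypothetical checkout module that depends on inventory and finance."""

    def __init__(self, inventory, ledger, prices):
        self.inventory = inventory
        self.ledger = ledger
        self.prices = prices

    def checkout(self, cart):
        for product, qty in cart.items():
            self.inventory.remove(product, qty)
            self.ledger.record(self.prices[product] * qty)

    def return_item(self, product, qty):
        self.inventory.add(product, qty)
        self.ledger.record(-self.prices[product] * qty)


def test_checkout_and_return_affect_other_modules():
    inventory = Inventory({"book": 10})
    ledger = Ledger()
    shop = Shop(inventory, ledger, prices={"book": 15})

    shop.checkout({"book": 3})
    # Checkout must deduct exactly the purchased quantity from inventory...
    assert inventory.stock["book"] == 7
    # ...and record the sale in the financial module.
    assert ledger.revenue == 45

    shop.return_item("book", 1)
    # A return must restore inventory and reverse the revenue.
    assert inventory.stock["book"] == 8
    assert ledger.revenue == 30


test_checkout_and_return_affect_other_modules()
print("cross-module checks passed")
```

A test that only checked the cart contents after checkout would pass even if inventory were never updated; asserting on the neighbouring modules is what exposes that kind of coupling defect.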

Structuring Test Cases

You now have all the information required to begin writing test cases, so let us look at how a test case is structured. Software requirements are mapped to test scenarios, which are further elaborated into test cases: for each test scenario we define test cases, and for each test case we define test steps. A test step is the smallest unit of a test case; it specifies the action to be performed and the expected result from the application under test.

A test case typically consists of the following fields:

1. Test Case ID (a unique number that identifies a specific test case)

2. Module to be tested (usually given as a Requirement ID to maintain traceability between the test case and the requirements)

3. Test Data (the variables and values the test case needs)

4. Test Steps (the steps to be executed)

5. Expected Results (how the application should behave after the stated test steps are performed)

6. Actual Results (the actual output the tester gets after performing the steps)

7. Result (pass or fail, based on comparing the expected and actual results)

8. Comments (screenshots or any other relevant information that helps the developer debug the code)
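The fields listed above map naturally onto a simple record. The Python sketch below is one possible representation, not a format prescribed by any tool; the class names `TestStep` and `TestCaseRecord` and all sample values are hypothetical. It derives the Result field by comparing the expected and actual result of every step, treating an unexecuted step as not passed.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TestStep:
    action: str                   # action the tester performs
    expected: str                 # how the application should behave
    actual: Optional[str] = None  # recorded during execution

    def passed(self) -> bool:
        # A step that has not been executed yet does not count as a pass.
        return self.actual is not None and self.actual == self.expected


@dataclass
class TestCaseRecord:
    case_id: str         # 1. Test Case ID
    requirement_id: str  # 2. Module to be tested, as a Requirement ID
    test_data: dict      # 3. Test Data
    steps: List[TestStep] = field(default_factory=list)  # 4-6. Steps, expected and actual results
    comments: str = ""   # 8. Comments (e.g. a screenshot path)

    def result(self) -> str:
        # 7. Result: Pass only if every step's actual result matches its expected result.
        return "Pass" if all(step.passed() for step in self.steps) else "Fail"


login = TestCaseRecord(
    case_id="TC-001",
    requirement_id="REQ-LOGIN-01",
    test_data={"username": "alice", "password": "secret"},
    steps=[
        TestStep("Open the login page", "Login form is displayed"),
        TestStep("Submit valid credentials", "Dashboard is displayed"),
    ],
)
login.steps[0].actual = "Login form is displayed"
login.steps[1].actual = "Error: invalid password"
print(login.case_id, login.result())  # TC-001 Fail
```

Keeping expected and actual results at the step level means a failed test case points straight at the step that diverged, which is the information a defect report needs.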

During testing you mark your results against each step, and each defect report references the ID of the test case that failed during execution. This lets the tester trace the failure back to the requirements and understand which business scenario needs to be fixed in the code.
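Because every test case carries a Requirement ID, the mapping can be used in both directions. A minimal Python sketch, assuming the mapping is kept as a plain dictionary from test case ID to Requirement ID (all IDs here are made up): the first function turns the failed test case IDs from defect reports into the affected requirements, and the second lists requirements that no test case covers yet.

```python
def requirements_to_fix(case_to_requirement, failed_case_ids):
    """Trace failed test case IDs (from defect reports) back to Requirement IDs."""
    return sorted({case_to_requirement[case_id] for case_id in failed_case_ids})


def uncovered_requirements(all_requirements, case_to_requirement):
    """Requirement IDs that no test case is mapped to, i.e. coverage gaps."""
    return sorted(set(all_requirements) - set(case_to_requirement.values()))


case_to_requirement = {
    "TC-001": "REQ-LOGIN-01",
    "TC-002": "REQ-LOGIN-01",
    "TC-003": "REQ-CART-02",
}
failed = ["TC-002", "TC-003"]

print(requirements_to_fix(case_to_requirement, failed))
# ['REQ-CART-02', 'REQ-LOGIN-01']
print(uncovered_requirements(["REQ-LOGIN-01", "REQ-CART-02", "REQ-RETURNS-03"], case_to_requirement))
# ['REQ-RETURNS-03']
```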

To write test cases you can use a simple spreadsheet (.xls file), or choose from the wide variety of tools already available. Some tools, such as Quality Center and TestDirector, require a license fee, while others are open source, such as Bugzilla.

Test cases can be written in great detail, with a large number of steps, or they can be relatively simple. Personally, I do not favor including a large number of steps in a single test case.

Here are my thoughts on how a tester can write effective test cases:

1. Self-explanatory and specific – test cases should include enough detail that even a new tester can execute them without help. All prerequisites required to execute a test should be stated in the test itself, and the test should clearly specify the purpose and scope of its steps.

2. Valid and concise – test cases should contain all the steps needed to meet the testing objectives, and no unnecessary ones. If a single test case has too many steps, the tester may lose focus.

3. Traceable – test cases should cover all the requirements of the software, and every test case should be mapped to a "Requirement ID". This helps ensure that testing covers 100% of the requirements and that no requirement goes untested. It also supports impact analysis.

4. Maintainable – when requirements change, the tester should be able to update the test suite easily, modifying the steps so that the test cases reflect the changes in the software.

5. Positive and negative coverage – test cases should test boundary values, equivalence classes, and normal and abnormal conditions. Beyond checking expected results, negative coverage tests failure conditions and error handling.

6. Usability coverage – test cases should test the UI for ease of use. The overall layout and colors should be checked against the style guide defined for the application, if there is one, or against the signed-off mock-up designs. Basic English punctuation, spelling, and drop-down list categorization, such as dependent pick lists, should also be covered.

7. Test data – test cases should use diverse data: valid data, legitimate invalid data (to test boundary values), and illegal or abnormal data (to test error handling and recovery).

8. Non-functional aspects – test cases should cover basic performance scenarios such as multi-user operation and capacity testing, security aspects such as user permissions and logging, and, for web applications, browser support.
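Points 5 and 7 can be illustrated together. The sketch below assumes a hypothetical input field that accepts whole-number ages from 18 to 60; `is_valid_age` stands in for the application under test and is not taken from any real system. The test data mixes boundary values, one representative per equivalence class, and illegal or abnormal inputs, with every expected outcome written down before the check runs.

```python
def is_valid_age(raw):
    """Hypothetical system under test: accepts whole-number ages 18..60."""
    try:
        age = int(raw)
    except (TypeError, ValueError):
        return False  # non-numeric or missing input is rejected
    return 18 <= age <= 60


def boundary_values(low, high):
    """Boundary value analysis for an inclusive range: just outside, on, just inside each edge."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


LOW, HIGH = 18, 60
boundary_cases = list(zip(boundary_values(LOW, HIGH), [False, True, True, True, True, False]))
equivalence_cases = [(5, False), (35, True), (90, False)]  # below, inside, above the range
illegal_cases = [("abc", False), ("", False), (None, False), ("17.5", False)]  # error handling

cases = boundary_cases + equivalence_cases + illegal_cases
failures = [(value, expected) for value, expected in cases if is_valid_age(value) != expected]
print("failures:", failures)  # failures: []
```

Boundary values catch off-by-one errors such as `<` written instead of `<=`, equivalence representatives keep the number of cases small, and the illegal inputs exercise the error-handling path rather than the happy path.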

To summarize, test cases should first cover all the functional requirements, and then cover the non-functional requirements as well, since these are equally important for the software to function properly.

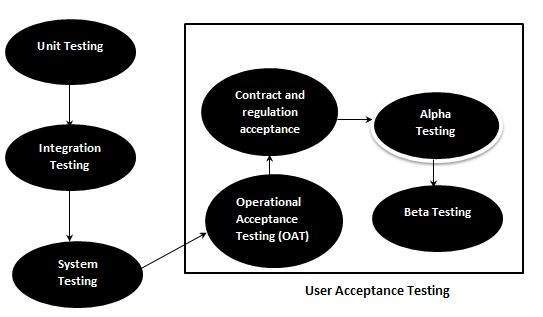

The acceptance test cases are executed against the test data or using an acceptance test script, and then the results are compared with the expected ones.
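A data-driven acceptance script of this kind might look like the following Python sketch. It assumes the test data has been exported from a spreadsheet as CSV and that the feature under test is a hypothetical `shipping_fee` rule (free shipping from 50 upwards, otherwise a flat fee of 5); both the data and the rule are invented for illustration.

```python
import csv
import io

# Hypothetical acceptance test data, e.g. exported from a spreadsheet as CSV.
TEST_DATA = """case_id,order_total,expected_fee
AT-01,20,5
AT-02,49.99,5
AT-03,50,0
AT-04,120,0
"""


def shipping_fee(order_total):
    """Hypothetical system under test: free shipping from 50 upwards, else a flat 5."""
    return 0 if order_total >= 50 else 5


def run_acceptance(csv_text):
    """Run every row against the system and compare actual with expected results."""
    results = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        actual = shipping_fee(float(row["order_total"]))
        expected = int(row["expected_fee"])
        results[row["case_id"]] = "Pass" if actual == expected else "Fail"
    return results


print(run_acceptance(TEST_DATA))
# {'AT-01': 'Pass', 'AT-02': 'Pass', 'AT-03': 'Pass', 'AT-04': 'Pass'}
```

Keeping the data separate from the script lets business users add acceptance cases to the spreadsheet without touching the code.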
